Power up your experimentation programme with the Fresh Egg CRO maturity model


Since William Gosset discovered the t-distribution in the early 1900s, laying the foundations for modern testing statistics, the practice of controlled experimentation has evolved considerably. The range of tools, resources and published literature has grown rapidly in the last ten years alone. However, business practices have broadly failed to keep pace. Decision-making isn’t as data-led as execs would have you believe, and supposedly “agile” development roadmaps leave no room for failure and learning. CRO teams have the potential to change that, but they face an uphill battle.


Mike Fawcett, Conversion Services Director

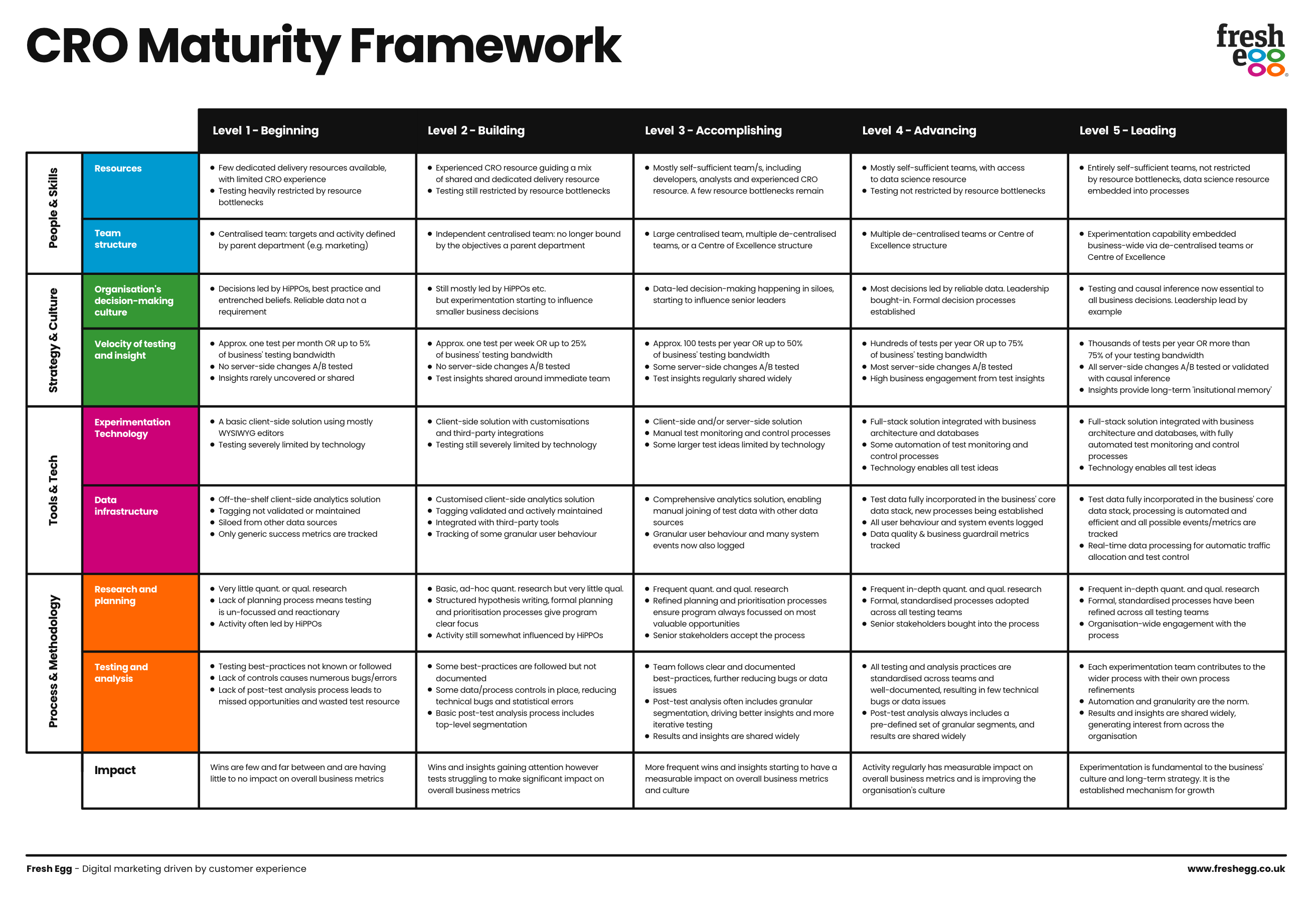

The following article will study how market leaders solve these challenges. Using the Fresh Egg CRO Maturity Model, we'll help you plot a course towards maturity. We can help you power up your experimentation programme and business with its accompanying (and downloadable) CRO Maturity Audit framework.

What is a maturity model, and what are its drawbacks?

In the late 1980s, the US Department of Defense needed a better way to evaluate its software contractors, so it sponsored the development of the Capability Maturity Model for software (CMM). It’s one of the earliest examples of a maturity model and helped establish the format.

Maturity models help us systematically evaluate working methods. With them, we can benchmark and improve practices; each step across the matrix reflects a new level of sophistication. Unfortunately, the process relies on human instruments and all too often, maturity is in the eye of the beholder.

The designers of the CMM complained, “the questionnaire was too often regarded as ‘the model’ rather than as a vehicle for exploring process maturity issues” [1]. It’s a challenge that businesses still feel today, as leaders at Microsoft, Booking.com, and Outreach.io summarised nicely in an article, and a subsequent conference paper, titled “It Takes a Flywheel to Fly”[2]. In it, they say:

“A Maturity Model has the right ingredients, yet what you cook with them and in what way determines how successful the evolution will be.”

No two businesses are exactly alike.

The needs in each phase of a company’s growth will change, so there’s no one-size-fits-all measure of good or bad. It will take a more tailored approach to figure out where you are and what you need to do to achieve the evolution mentioned above. We believe our in-depth CRO Maturity Audit - with its comprehensive survey, collaborative workshops, and detailed action planning - helps resolve this.

What does “CRO Maturity” mean?

It can be challenging to imagine how a mature organisation operates unless you've seen real "test-and-learn" principles in action. In the following sections, we'll try to understand what CRO maturity means by digging into what those high-flying businesses do that others don't. Included here are many themes from our CRO Maturity Framework.

Maturity is about decision-making culture

"Making decisions is easy — what's hard is making the right decisions."

Martin Tingley, Head of Experimentation Analysis at Netflix

Every business claims to be 'data-driven', which usually means reading spreadsheets like tea leaves. Mature businesses have an arsenal of statistical methods that make their data meaningful so that every department can use it. This approach ensures their decisions are consistent and objective. In his article 'Decision Making at Netflix'[3], Martin Tingley discusses some of those: "A/B testing, along with other causal inference methods like quasi-experimentation[4], are ways that Netflix uses the scientific method to inform decision making."

Fig. 1 Companies well-known for their scientific decision-making practices

Rather than trusting HiPPOs (Highest Paid Person's Opinions) or committee groupthink to make decisions, mature businesses create an environment where evidence is the top dog. The principle informs every critical decision and is often enshrined in official company literature. According to Netflix's description of its day-to-day working culture, all employees are encouraged to "disagree openly" (regardless of who they contradict) as long as they build opinions on evidence.

Of course, this culture requires a certain amount of what Google calls "psychological safety"[5], i.e. feeling safe enough to ask 'stupid questions', take risks and disagree with your boss. Google's DORA research programme uses Ron Westrum's three "cultural archetypes"[6] to characterise businesses: "pathological" (based on power and status), "bureaucratic" (governed by rules), and "generative" (driven by performance). Unsurprisingly, they found high-trust, generative cultures are most predictive of high performance. While interesting test results can breed more curiosity and trust in data, business-wide culture is almost impossible to change from the bottom up. This is where leadership comes in.

Maturity is about leadership

"Leaders cannot just provide the organisation with an experimentation platform and tools. They must provide the right incentives, processes, and empowerment for the organisation to make data-driven decisions."

Kohavi, Tang, Xu, Trustworthy Online Controlled Experiments 2020 [10]

Senior leaders are responsible for setting standards. Their decision-making style will be a template for other decision-makers throughout the business. For example, a leader with little exposure to the sometimes counter-intuitive results of A/B tests will most likely overestimate their ability to make good decisions without supporting evidence (as per the Dunning-Kruger effect)[7]. They will probably fall back on entrenched beliefs, best practices and flawed logic, e.g. "our competitors are doing X, so that must be good". Reliance on these tropes will eventually create a hostile environment for new ideas, where teams have knee-jerk reactions to any thoughts that contradict existing beliefs, even those with compelling evidence (see the Semmelweis Reflex)[8].

In contrast, a leader with plenty of exposure to experimentation will know that evidence drives good decisions and that entrenched beliefs are the enemy of progress. As such, they'll embed this mindset in the DNA of their company - see Netflix's cultural values[9] again.

"Three of the cultural values of Netflix are curiosity, candour, and courage. Netflix encourages new employees to challenge conventional wisdom when they join the company."

Gibson Biddle, former VP of Product at Netflix[16]

Unfortunately, if your leaders aren't already bought into this way of thinking, your path to maturity will be as much about winning their hearts and minds as winning tests.

Maturity is about velocity

"If you have to kiss a lot of frogs to find a prince, find more frogs and kiss them faster and faster."

Mike Moran, Do It Wrong Quickly (2007)

In their book, Trustworthy Online Controlled Experiments[10], Ron Kohavi (Airbnb & Microsoft), Diane Tang (Google) and Ya Xu (LinkedIn) suggest a fully mature business should be running "thousands of tests per year". This notion supports the old Silicon Valley adage that companies should fail fast and fail often, but most businesses don't have the traffic or infrastructure to support that many tests. Rather than focussing on exact numbers, one should see velocity as a bandwidth challenge. It's a question of how you can maximise your traffic and resources to create maximum business impact. In the 1,000 Experiments Club podcast, Lukas Vermeer of Vista (formerly Booking.com) describes velocity as a tangential objective. He says, "the goal should be to run experiments and have a lot of impact on the company's future direction".

Ask yourself how many tests you could run if:

- You were constantly exposing all your traffic to experiments

- Non-technical users from all around the business could quickly set up, run and analyse simple experiments

- You could A/B test back-end changes, like new pricing or product features, as well as design changes

- Your product/development teams used A/B testing (or other inference techniques) to measure the impact of all their changes

- Manual test processes were automated or refined

- Everyone in the business understood experimentation as a critical growth-driver

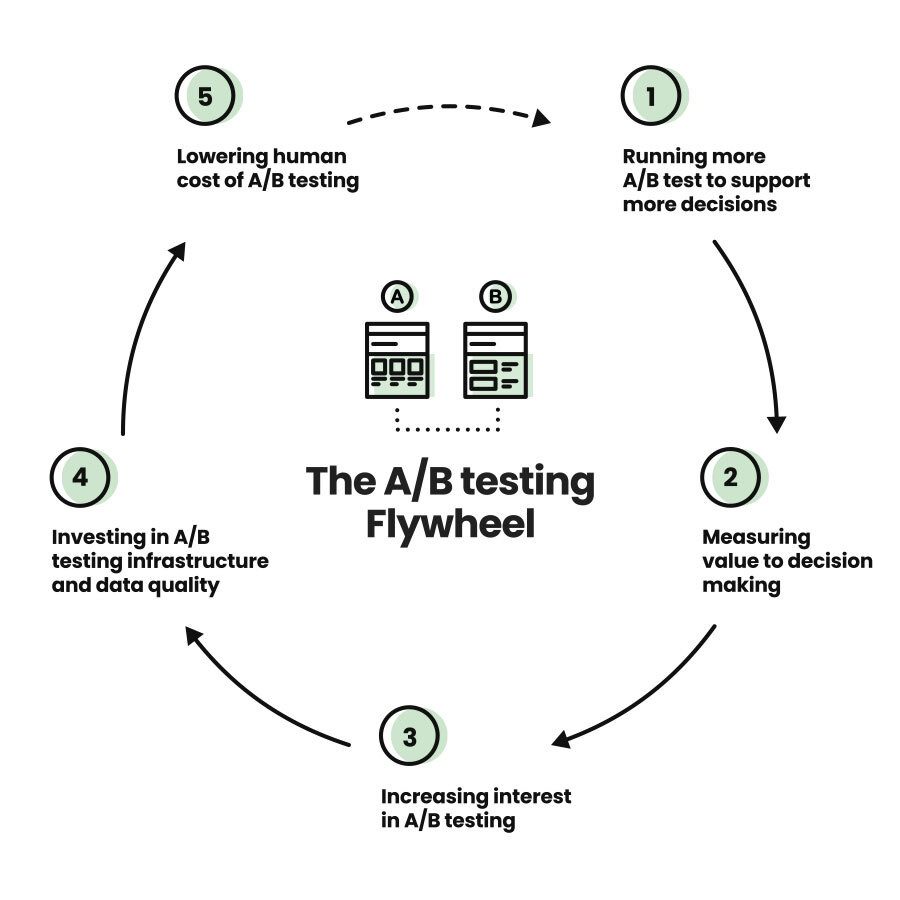

As you'll see from the flywheel analogy referenced later in this article, velocity is part of a positive feedback loop where velocity drives maturity and vice versa. Getting into that virtuous cycle in the first place is often the biggest challenge. For that, you'll need technology.

Maturity needs integrated technology and data

“You cannot create a modern experimentation programme with tools and systems designed for a different era.”

Davis Treybig[11]

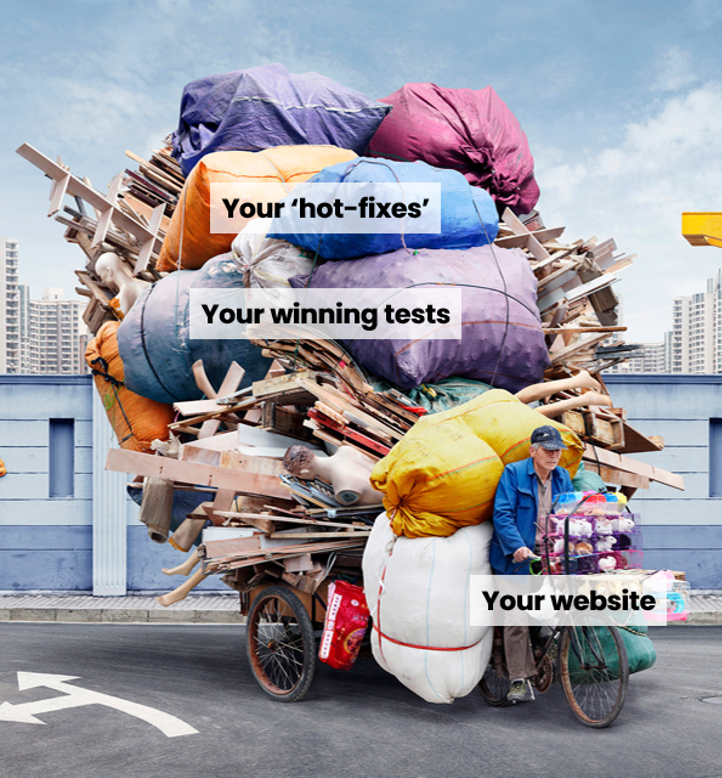

Every CRO professional has experienced the pain of integrating testing tool A with analytics tool B, let alone trying to align third-party data with first-party business MI. On top of that, teams inevitably want to test bigger and bolder changes than their client-side* testing tool allows. Success breeds higher expectations, and without investment in server-side tech, teams are forced to "hack" around these limitations. Because their testing tools let them implement changes quickly, CRO teams also find themselves running numerous 'hot fixes' and serving winning tests to 100% of traffic through those tools, leaving their websites teetering like a delicately stacked house of cards. If this sounds familiar, you're not alone.

Alain Delorme - Totems

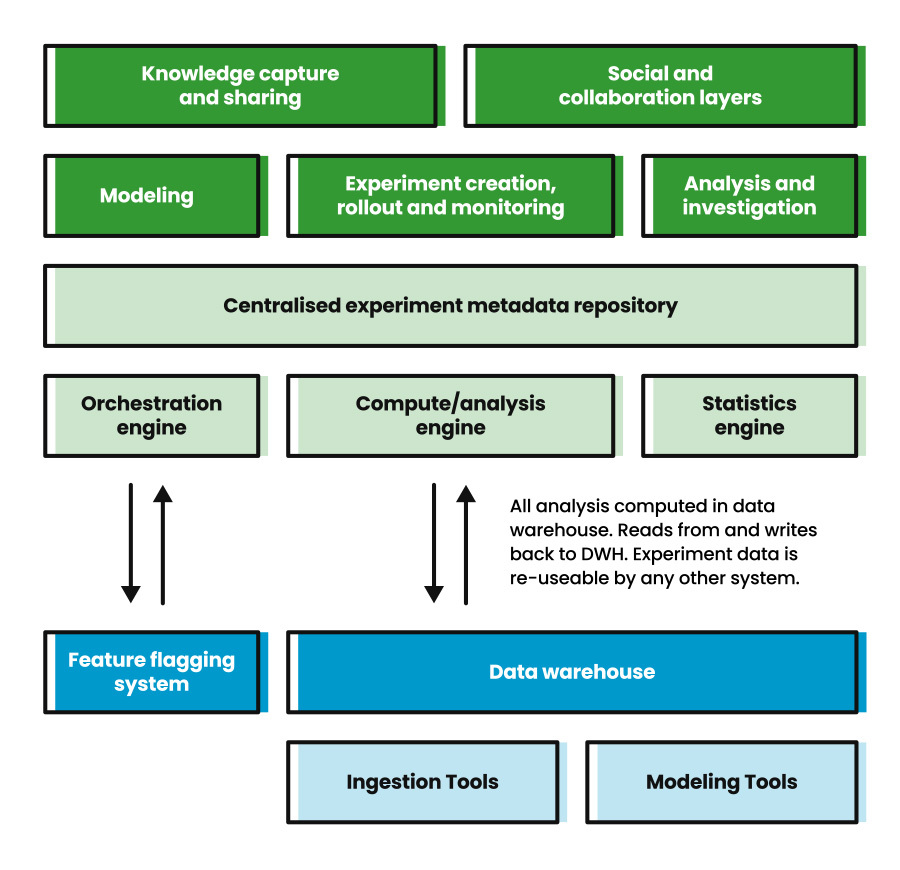

While mainstream A/B testing and analytics tools can deliver exceptional value, many lack the functionality for the needs of a mature business. When you read the technical blogs of experimentation leaders like Airbnb, LinkedIn, Netflix, and Uber, numerous articles[12] describe custom-built, in-house experimentation platforms. These systems benefit from being tightly integrated with their business' tech-stack and dev processes. They also natively integrate with modern data infrastructures, such as cloud-based data lakes and data warehouses. There's no need to worry about discrepancies between various third-party tools and business MI because it's all the same data.

Fig. 2 'Critical Experimentation Platform Components in the context of a modern data stack'

original image by Davis Treybig[11], used with permission

Other attributes that differentiate these platforms from traditional A/B testing tools are:

- Automation - from advanced statistical processing (e.g. outlier capping, error corrections and variance reduction – see CUPED[13]) to real-time monitoring and automatic stopping of damaging tests

- Self-service capability - allowing non-technical users to run their tests without external help, e.g. Netflix's Shakespeare[14] tool

- Comprehensive instrumentation and management tools – in-depth event logging, debugging, conflict detection, test tagging and searchability.
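To give a flavour of the variance reduction mentioned in the first bullet, here is a minimal sketch of the core CUPED adjustment (Controlled-experiment Using Pre-Experiment Data). It is a simplified illustration and not how any particular platform implements it. It works on a single group of users, uses simulated data, and the names `cuped_adjust`, `pre_spend` and `spend` are hypothetical. The idea is to subtract the part of each user's metric that their pre-experiment behaviour already predicts.

```python
import random
from statistics import mean, variance


def cuped_adjust(metric, pre_metric):
    """Return CUPED-adjusted values: y - theta * (x - mean(x)).

    metric:     the in-experiment metric per user (y)
    pre_metric: the same users' pre-experiment metric (x), in the same order
    """
    x_bar = mean(pre_metric)
    y_bar = mean(metric)
    # Sample covariance between the pre-experiment and in-experiment metric.
    cov_xy = sum((x - x_bar) * (y - y_bar)
                 for x, y in zip(pre_metric, metric)) / (len(metric) - 1)
    theta = cov_xy / variance(pre_metric)
    return [y - theta * (x - x_bar) for x, y in zip(pre_metric, metric)]


# Simulated users whose in-experiment spend correlates with their earlier spend.
rng = random.Random(42)
pre_spend = [rng.gauss(50, 10) for _ in range(2000)]
spend = [0.8 * x + rng.gauss(10, 5) for x in pre_spend]

adjusted = cuped_adjust(spend, pre_spend)
print(round(variance(spend), 1), round(variance(adjusted), 1))
```

The adjusted metric keeps the same mean but has a smaller variance, so a test on it can reach the same sensitivity with less traffic. In a real experiment, the adjustment coefficient is normally estimated across all variants together before comparing them.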

Of course, not everyone has the budget or skills to build an in-house platform. However, you can use these features as guiding principles. If your tests are tightly integrated with DevOps, if your data joins nicely with business MI, if your results are widely shared and generate healthy discussion, and if you can test what you want, how you want and be sure your data isn't skewed somehow, you've already achieved a high level of technical maturity.

*Client-side: With client-side testing tools, everything happens in the user's (client's) browser. The underlying application is unaware of what the client-side tool is doing, so any change to the underlying application can easily break client-side tests built upon it. However, it's often faster and easier to test smaller changes this way.

If you’re building your own platform...

The “Experimentation Evolution Model”[15] by Fabijan, Dmitriev, Olsson, and Bosch is one of the most well-researched and often-cited maturity models out there. If you’re looking to build an in-house experimentation platform akin to those mentioned above, this is the model for you. However, most CRO programmes start much smaller, with third-party tech and limited resources, so this is where the Fresh Egg CRO Maturity Model begins.

Introducing the Fresh Egg CRO Maturity Model

Now we’ve got a better grasp of what maturity means, let us introduce the Fresh Egg CRO Maturity Model. Here’s a quick summary of how the model and its accompanying Maturity Audit work:

- We categorise the model into four main “maturity pillars”, each with sub-categories (see fig. 3 - the four maturity pillars)

- We’ve also included a final category called ‘Impact’, which helps you judge how your programme is performing. Getting a 5/5 score here is the goal.

- Across each of these pillars and sub-sections, you can score from 1 to 5, ranging from ‘Beginning’ to ‘Leading’ (see fig 4 – the five steps to maturity).

- Scoring: You can use the framework diagram below to score yourself. However, we recommend taking our complete CRO Maturity Audit to get the most from our model. In this bespoke service, we conduct an in-depth survey and follow-up workshops to help you build a long-term maturity plan with clear actions.

To learn more about our in-depth maturity survey and our follow-up workshops, with which we’ve helped numerous organisations, feel free to jump straight to our CRO Maturity Audit page.

The Four Pillars of CRO Maturity

Fig 3 - the four maturity pillars

When trying to answer what CRO maturity means earlier, we identified that Leadership and Velocity play a critical role in the maturity journey. However, looking through industry literature, discussions about maturity tend to follow a few common broader themes, which are:

- People & Skills: Who do you have available to support experimentation activity? Are they dedicated resources, what skills do they have and how experienced are they? Is experimentation owned by just one team or more widely distributed throughout the business? Do you have data science capability?

- Strategy & Culture: Do you have a company-wide culture of reliable, scientific decision-making? Do your programme’s objectives align with your organisation’s? Are your leaders bought into this culture, or are you fighting an uphill battle?

- Tools & Technology: Do you have the tools and technology to enable testing and measurement at scale across your entire tech stack? Are you using a third-party client-side A/B testing tool, or do you have something more integrated? Do you struggle to join data from multiple sources, or does everything run on a single-source-of-truth database?

- Process & Methodology: Are you operating an experimentation programme with efficient, robust processes? Are you adhering to statistical best practices? Do your methods produce reliable, trustworthy results? Can non-technical colleagues easily set up and run their experiments?

The Maturity Journey: powering up and staying fully charged

The journey to maturity isn’t always linear. That’s why we like to use the analogy of a charging battery. Each of the above maturity pillars is like an individual battery cell that requires its own charge. If you neglect any one of those, you can quickly lose control and go backwards.
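The battery analogy can be sketched as a simple self-scoring helper. This is an illustrative assumption rather than the scoring method used in the CRO Maturity Audit. It takes one score from 1 (‘Beginning’) to 5 (‘Leading’) per pillar and reports the weakest pillar alongside a plain average, on the reasoning that a neglected cell holds the whole battery back. The function name `summarise_maturity` and the example scores are hypothetical.

```python
from statistics import mean

# The four maturity pillars named in the article.
PILLARS = (
    "People & Skills",
    "Strategy & Culture",
    "Tools & Technology",
    "Process & Methodology",
)


def summarise_maturity(scores):
    """Summarise per-pillar scores (1 = 'Beginning', 5 = 'Leading').

    Returns the plain average and the weakest pillar. Both are illustrative
    summaries, not an official scoring rule.
    """
    missing = [pillar for pillar in PILLARS if pillar not in scores]
    if missing:
        raise ValueError(f"Missing scores for: {', '.join(missing)}")
    for pillar in PILLARS:
        if not 1 <= scores[pillar] <= 5:
            raise ValueError(f"{pillar} must be scored from 1 to 5")
    weakest = min(PILLARS, key=lambda pillar: scores[pillar])
    return {
        "average": mean(scores[pillar] for pillar in PILLARS),
        "weakest_pillar": weakest,
        "weakest_score": scores[weakest],
    }


# Hypothetical self-assessment.
example = summarise_maturity({
    "People & Skills": 3,
    "Strategy & Culture": 2,
    "Tools & Technology": 4,
    "Process & Methodology": 3,
})
print(example)
```

Reporting the weakest pillar next to the average keeps a strong score in one area from hiding a flat cell elsewhere.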

Fig 4 - The five steps to maturity

In the flywheel article referenced earlier, the authors describe the maturity journey as a continuous, iterative improvement process. To move through each phase of maturity, you must turn the wheel and, most importantly, keep it spinning. This is what keeps those batteries charged, propelling you along your maturity journey. We’ve recreated their flywheel diagram below:

Fig 5 - The A/B Testing Flywheel, Fabijan [2]

Diagram re-created from the original article.

The CRO Maturity Framework

We’ve created the following framework diagram as an A3 poster to allow you to get a rough idea of your maturity up-front. Highlight which statement in each row most closely describes your organisation. That last word is critical. Maturity is something that happens at an organisational level. It’s not about how capable your team is. Remember, a framework diagram is just a tool. It’s what you do with it that counts. Our CRO Maturity Audit goes into more depth and provides a clear action plan for growing your maturity.

Get your copy of the CRO maturity framework

What makes our approach different?

High-functioning agile teams are familiar with self-analysis. Each sprint retrospective offers them a chance to review their work and how they’ve worked. A good CRO maturity model should function the same way. It should lead to clear actions, uncover blind spots, and provide inspiration. As the designers of the CMM[1] said, it should be a “vehicle for exploring” weaknesses and opportunities. The score you achieve and where you sit on the maturity scale don’t matter. The real gold is in the detail. That’s why we believe our approach is so valuable.

Our CRO Maturity Audit includes an in-depth survey (with flexible industry and business-specific scoring) and a series of follow-up workshops. We collaborate with your teams to create a long-term maturity plan with clear actions. Please take the next step on your maturity journey today by signing up for a CRO Maturity Audit, or call us now to find out more.

References

[1] Paulk, Curtis et al - Capability Maturity Model for Software 1993

[2] Fabijan et al - The A/B Testing Flywheel 2020

[3] Tingley et al - Decision Making at Netflix 2021

[4] Toker-Yildiz, McFarland and Glick - Key challenges with Quasi Experiments at Netflix 2020

[5] Julia Rozovsky - The five keys to a successful Google team 2015

[6] Ron Westrum - The Westrum organizational typology model 2004

[7] Wikipedia - Dunning–Kruger effect

[8] Wikipedia - Semmelweis reflex

[9] Netflix Culture

[10] Kohavi, Tang, Xu - Trustworthy Online Controlled Experiments 2020

[11] Davis Treybig - The Experimentation Gap 2022

[12] Davis Treybig - Experimentation Resources

[13] Michael Berk - How to Double A/B Testing Speed with CUPED 2021

[14] Brandall, Xu, Chen and Schaefer - Words Matter: Testing Copy With Shakespeare 2021

[15] Fabijan, Dmitriev, Olsson and Bosch - The Evolution of Continuous Experimentation in Software Product Development 2017

[16] Gibson Biddle - A Brief History of Netflix Personalization 2021