What Google Can Understand About a Brand and What This Means for Marketers

For businesses and their marketing teams, understanding the key factors that determine the ranking of a website in Google’s search results can be a tricky proposition. The algorithms that power the search engine are evolving quickly, and this means that information available on the web can quickly become out of date.

In addition, a lot of the information published on the internet is derived from outdated practices that can now do far more harm than good, or is just plain misleading.

In reality, nobody outside of a limited few within Google can be 100% certain of how the search engine results are calculated because there is simply not enough information in the public domain.

For example, the ways in which the different factors interact and affect each other are not publicly discussed by Google in any significant depth. While Google publicly states that there are “more than 200” ranking signals, this does not account for all of the variations, thresholds and sub-signals that inflate that number significantly into the thousands or even tens of thousands.

Therefore, it’s important to take an evidence-based approach to understanding the ingredients needed to achieve success in Google’s organic search results. Much of the latest evidence indicates that Google’s algorithms now look far beyond the traditional ‘checklist’ of ranking factors that the SEO industry has typically focused on.

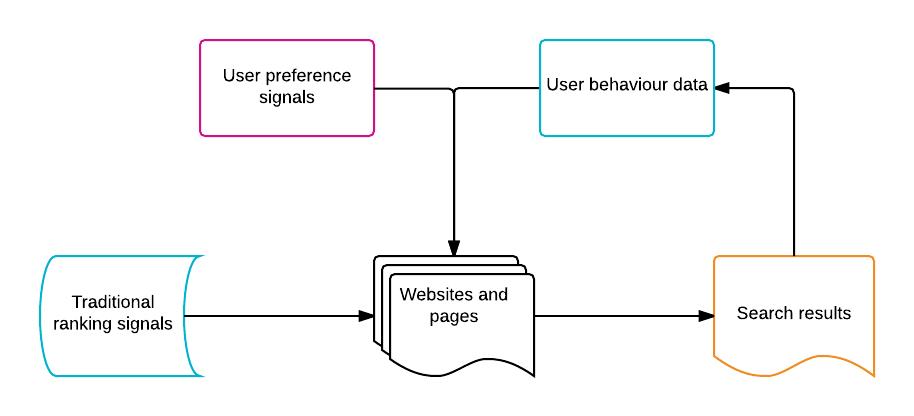

For example, a simplified view of the search landscape that businesses must now contend with is represented as follows:

By traditional ranking signals, we mean all those elements that have typically been focused on by the search marketing industry. These typically break down into three groups:

- Page level factors – typically include the content of the page and the surrounding meta information, such as the page title

- Domain level factors – the architecture of a site, its internal linking structure and a wide range of other elements that apply to a website as a whole

- Offsite factors – traditionally this primarily applies to backlinks but has expanded in recent years to include citations (mentions of a brand within content that are not hyperlinked)

To this, we need to add two other groups of factors that are generally outside of a marketer’s control. Discussion within the search marketing community sometimes overlooks these but the impact they have on the search results warrants greater scrutiny.

- Personal signals – a user’s search history, browser history, and similar factors have an impact on the results they will see, especially if they are logged into a Google account when they search

- Contextual signals – Google can gain a great deal of insight into what a user is looking for by analysing the context of their search. The physical location of the user is one of the more commonly encountered factors

All of these factors are still considered but there is growing evidence that they are now being qualified and validated by large-scale data collected directly from search users. This provides a deeper understanding of how users react to the results that the search engine is providing.

Google patents

A study of the patents granted to Google indicates the general evolution of the algorithms. For example, a patent granted and published in May 2015 explains how website quality scores are calculated by measuring the volume of searches conducted for search terms “that are categorized as referring to a particular site”.

What types of keywords might be understood to refer to a specific website? There are three specific groups included in the patent:

- Site queries – typically understood to include the use of the advanced search operator ‘site:example.com’, which limits search results to pages from the specified domain

- Referring queries – search queries that are determined to include a direct reference to a specific website, such as ‘example.com news’

- Navigational queries – search queries for which a website has received at least a threshold percentage of user clicks from the resultant search results

Here we see that Google is able to gain a clear understanding of the level of interest in a particular brand or website.

The patent states that this data is used to calculate quality scores for the resources (primarily web pages) on the site and that these scores have a direct impact on “how other attributes are used to score resources” on a website.
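As a rough illustration of the three query groups described above, the sketch below sorts a query log into site, referring and navigational queries for one domain. It is not the patent's method: the function names, the `click_share` input and the 0.5 threshold are all assumptions made for the example, since the patent only refers to "a threshold percentage" of clicks.

```python
import re
from collections import Counter

# Matches the advanced search operator, e.g. "site:example.com"
SITE_OPERATOR = re.compile(r"\bsite:(\S+)", re.IGNORECASE)


def classify_query(query, domain, click_share=0.0, nav_threshold=0.5):
    """Label one query as 'site', 'referring', 'navigational' or None.

    click_share is the fraction of clicks on this query's results that
    went to `domain`. nav_threshold is a hypothetical cut-off.
    """
    q = query.lower()
    domain = domain.lower()

    # Site query: the site: operator names this domain
    match = SITE_OPERATOR.search(q)
    if match and match.group(1).split("/")[0] == domain:
        return "site"

    # Referring query: the domain appears directly in the query text
    if domain in q:
        return "referring"

    # Navigational query: enough of the clicks went to this domain
    if click_share >= nav_threshold:
        return "navigational"

    return None


def brand_interest(query_log, domain):
    """Sum search volume per query group.

    query_log is an iterable of (query, volume, click_share) tuples.
    """
    counts = Counter()
    for query, volume, click_share in query_log:
        label = classify_query(query, domain, click_share)
        if label:
            counts[label] += volume
    return counts
```

A marketer could run something similar over exported query data to estimate how much search demand refers to the brand itself rather than to generic keywords.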

Search Console data

Google’s Search Console toolset allows website owners to evaluate how their site is performing within the organic search results, and this can also reveal data and insights that are not available via onsite analytics and tracking suites.

For example, it can reveal cases where Google appears to be running tests within its search results to determine the relevance and quality of a page.

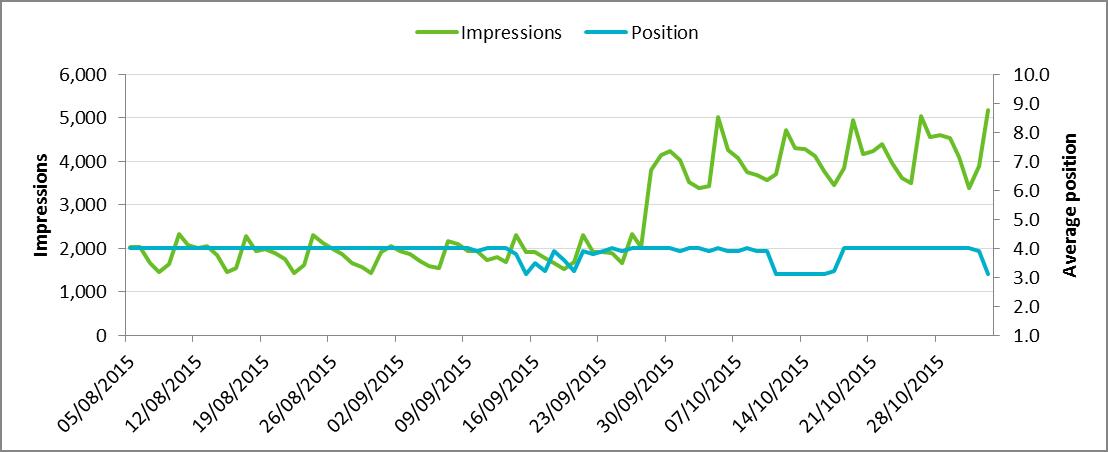

The charts below show Search Console data for a Fresh Egg client in the financial sector for a high-volume commercial search query.

Regular landing page

The chart shows an increase in impressions in late September as an industry trend comes into effect, but otherwise a consistent set of data.

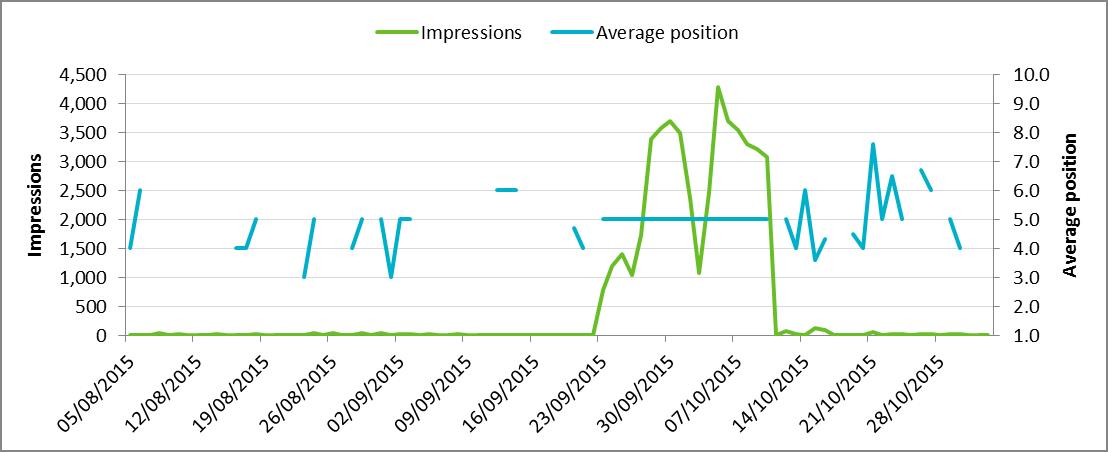

However, Search Console also reveals something very interesting – for a period of about three weeks, Google was showing the site’s homepage quite consistently for this same search query, as opposed to very infrequent impressions both before and after this time.

Homepage

Note that there was no concurrent decrease in impressions for the regular landing page during this three-week period. The homepage was being shown with the regular landing page in the search result as a double listing, with the two pages typically appearing at #4 and #5 respectively.

What does the data suggest was happening here?

The consistency of the average position for the homepage during the three weeks suggests the type of control that would be required to maintain the conditions of a test. Compare this with the ‘noisy’ average position data both before and after.
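One way to put a number on this kind of consistency is to compare the spread of daily average positions inside the suspected test window with the spread outside it. The sketch below is only an illustration: the function name, the 0.5 ratio and the sample figures are invented for the example and are not the client's data.

```python
from statistics import pstdev


def looks_controlled(window, baseline, ratio=0.5):
    """Return True if positions in `window` vary far less than in `baseline`.

    Both arguments are lists of daily average positions exported from
    Search Console. `ratio` is a hypothetical cut-off: the window's
    standard deviation must be at most `ratio` times the baseline's.
    """
    return pstdev(window) <= ratio * pstdev(baseline)


# Invented figures for illustration only
before = [12.0, 31.5, 8.2, 45.0, 19.3]  # 'noisy' positions
during = [5.0, 5.1, 4.9, 5.0, 5.2]      # steady positions

print(looks_controlled(during, before))
```

A low spread does not prove that a test took place, but it helps to separate candidate periods from ordinary fluctuation before looking at them more closely.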

What else can we deduce from this?

- The homepage being shown immediately beneath the regular landing page indicates Google is looking specifically at the client site in question

- The double listing gives users a choice between two relevant URLs from the same site, where previously there was none

- The data collected from this may be useful in determining the quality and relevance of the regular landing page by looking at how the addition of a choice affected user behaviour

This is just one example, but Search Console data does reveal others that indicate a pattern of regular testing. When we consider something that Matt Cutts explained in one of his Webmaster Help videos in early 2014, the case becomes even stronger:

"If you make it [developing an algorithm change] further along, and you're getting close to trying to launch something, often you'll launch what is called a live experiment where you actually take two different algorithms, say the old algorithm and the new algorithm, and you take results that would be generated by one and then the other and then you might interleave them. And then if there are more clicks on the newer set of search results, then you tend to say, you know what, this new set of search results generated by this algorithm might be a little bit better than this other algorithm." [Source: YouTube]

While the data in Search Console does not provide any insight that can be used in a tactical sense, simply understanding what Google is doing here has wide-ranging implications for how businesses should be thinking about organic search – again, Google is far more complex and nuanced than a checklist of ranking factors.
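The live experiment Matt Cutts describes can be sketched in a few lines. The example below uses a simple alternating interleave and counts clicks per algorithm. It is an assumption-laden illustration rather than Google's implementation: the function names are hypothetical, and published methods such as team-draft interleaving randomise which ranking picks first to avoid favouring one side.

```python
from itertools import zip_longest


def interleave(old_results, new_results):
    """Alternate between two rankings, skipping URLs already placed.

    Returns a list of (url, source) pairs, where source is 'old' or 'new'.
    The new ranking always picks first here, which a real experiment
    would randomise.
    """
    merged, seen = [], set()
    for old_url, new_url in zip_longest(old_results, new_results):
        for url, source in ((new_url, "new"), (old_url, "old")):
            if url is not None and url not in seen:
                seen.add(url)
                merged.append((url, source))
    return merged


def credit_clicks(merged, clicked_urls):
    """Count clicks against the algorithm that contributed each result."""
    source_of = dict(merged)
    tally = {"old": 0, "new": 0}
    for url in clicked_urls:
        if url in source_of:
            tally[source_of[url]] += 1
    return tally
```

If the new algorithm's results collect more clicks across many searches, that is the signal Cutts describes as suggesting the new results "might be a little bit better".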

RankBrain

The recent confirmation that a new system, codenamed RankBrain, was launched by Google earlier in 2015 highlights the search engine’s evolution towards the use of machine learning and big data to train its algorithms.

Little is known about this new system at the time of writing but, from the information Google has publicly confirmed, it seems clear that RankBrain provides a new method of interpreting the meaning of search queries and providing appropriate types of content for them.

The original article published by Bloomberg.com, featuring an interview with Google Research Scientist Greg Corrado, included the following explanation:

“If RankBrain sees a word or phrase it isn’t familiar with, the machine can make a guess as to what words or phrases might have a similar meaning and filter the result accordingly, making it more effective at handling never-before-seen search queries.” [Source: Bloomberg]

The introduction of RankBrain offers further evidence that Google is continuing to add cutting-edge technologies to its search engine algorithms. As it does so, its ability to see beyond even modern SEO tactics increases. As Google advances, so must our understanding of it and our approach to marketing.

Search Quality Rating Guidelines

To guide the human evaluators who carry out manual assessments of pages and websites, Google maintains a document called the Search Quality Rating Guidelines. This assessment process provides Google with a useful way to validate the quality of its search algorithms.

From time to time, copies of this document have been leaked but, in November 2015, Google took the unusual step of publishing it openly. The contents give an insight into some of the factors that Google is seeking to understand about a website and its content, and one of these is website reputation. To quote from the document:

"Use reputation research to find out what real users, as well as experts, think about a website. Look for reviews, references, recommendations by experts, news articles, and other credible information created/written by individuals about the website."

The document states that reputation research is required for every website the evaluators assess, and defines the types of information that should be sought out. These include:

- User reviews and ratings

- News articles

- Recommendations from expert sources, such as professional societies

- Recognised industry awards (for example, journalistic awards such as the Pulitzer prize won by newspaper websites)

Conversely, evidence of malicious or criminal behaviour can be taken as evidence of a negative reputation. Evaluators are encouraged to look beyond the reputation information contained on the website itself, and seek external evidence as well.

While the Search Quality Rating Guidelines are not a mirror image of the factors used by Google’s algorithms, they again strongly suggest that Google considers a website’s reputation to be important.

What this means for business owners and marketing departments

For businesses, Google’s ability to start looking beyond the technical optimisation and content of a website has profound implications. It is no longer enough to optimise pages and ensure that links are coming into a website from external sources.

- Reputation matters to Google – businesses need to carefully nurture a positive reputation using open, transparent methods, listening to the constructive feedback received from genuine customers and acting upon it wherever possible. Attempting to shortcut the process by suppressing the negative, or falsely creating a positive reputation, is likely to backfire – Google excels at detecting patterns of manipulative activity, but also understands that an amount of negative coverage is inevitable for any business (as reflected in the Search Quality Rating Guidelines)

- ‘Offline’ factors may have an indirect impact – for example, consistently poor customer service might have an influence on user search behaviour, and will certainly be reflected in discussions on relevant forums and review sites. Likewise, the quality of a brand’s core product or service will also have an impact

- Brand building is important – growing brand awareness and approval can drive greater numbers of users to search for the brand in Google. While being among the top results for generic keywords is important, a marketing strategy that encourages users to search for the brand directly instead of those keywords (for example, “Brand.com red widgets” instead of just “red widgets”) is likely to achieve more

- Businesses need organic search advocates – while it’s unrealistic to expect every department or team to have a detailed understanding of Google and the way its algorithms work, it is increasingly important that they understand the ways in which their work will impact organic search, and how they can use this to the business’s advantage

SEO can no longer operate effectively in its own silo, divorced from all other business activity. It needs to be integrated into every key marketing or web development decision to ensure that the implications and consequences are understood.

To be successful in organic search, a brand must seek to capitalise on its unique selling points and strive to be the preferred choice of the target audience.

To understand what your business needs to do to achieve success in organic search, please contact us directly via sales@freshegg.com