The Evolution of Search and SEO

By Stephen Jones | 2 Jul 2014

What's the goal of SEO?

Many aspects of search engine optimisation have changed over the last two decades but, essentially, the goal has remained the same: marketing a website so that it has good visibility in search engines. As search engine technology has improved and, more importantly, the experience of using search engines has become richer, the techniques used by SEO agencies have had to adapt.

A timeline of search engine development

At the heart of SEO is a technical component. In the early days of the internet, many search engines were not particularly good at crawling the web, so one of the main purposes of SEO was to ‘make up the gap’ and ensure that engines visited and recognised important pages they would otherwise have missed. Although modern crawlers are better at exploring complex URLs and even JavaScript, it’s still entirely possible to drop a whole site from Google’s index with one careless directive in a robots.txt file.
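To show how small the margin for error is, here is a sketch using Python’s standard-library `urllib.robotparser`. The two robots.txt files, the `allowed` helper and the example.com URL are hypothetical, and the standard-library parser uses simple prefix matching, which is close to, but not identical with, how Googlebot interprets the file.

```python
from urllib.robotparser import RobotFileParser


def allowed(robots_txt: str, url: str, agent: str = "Googlebot") -> bool:
    """Return True if `agent` may crawl `url` under the given robots.txt rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse rules from a string, no network access
    return parser.can_fetch(agent, url)


# Intended rule (hypothetical): keep crawlers out of the /admin/ area only.
intended = """\
User-agent: *
Disallow: /admin/
"""

# Careless rule (hypothetical): the folder name was lost, so "/" matches every path.
careless = """\
User-agent: *
Disallow: /
"""

url = "https://www.example.com/products/shoes"
print(allowed(intended, url))  # True: /products/shoes is not under /admin/
print(allowed(careless, url))  # False: every URL on the site is now blocked
```

A single missing path segment is the difference between hiding one folder and hiding the entire site from crawlers.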

Once a website had been crawled and added to a search engine’s index, the next step was to rank results in order of relevance to the query. Early versions of this process relied on text matches in the page content and meta content ‘read’ by the crawler.

On-page content factors

Well, you don’t have to be an SEO genius to work out a strategy to take advantage of that mechanism, do you? Very quickly, pages appeared that were stuffed with high-volume search terms, often keywords with no relevance to the site or its content at all. Some pages featured footer blocks of text that were just endless repetitions of phrases. Sites that didn’t want to fill visible page text with garbage used other techniques, such as hiding text in the same colour as the background (invisible text) or packing meta element fields with kilobytes of content.
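To see why stuffing worked against text-matching engines, here is a deliberately naive scorer. It is a sketch assuming that relevance is simply the share of a page’s words that match the query; the `keyword_score` function and the sample page text are hypothetical, and no real engine was ever quite this simple.

```python
import re
from collections import Counter


def keyword_score(page_text: str, query: str) -> float:
    """Naive relevance: fraction of the page's words that are query terms."""
    words = re.findall(r"[a-z']+", page_text.lower())
    if not words:
        return 0.0
    counts = Counter(words)
    query_terms = set(re.findall(r"[a-z']+", query.lower()))
    hits = sum(counts[term] for term in query_terms)
    return hits / len(words)


query = "used cars"
honest = "We sell new and used cars with a full service history and a warranty."
# A stuffed page: the same copy plus a footer of repeated key phrases.
# A scorer that only reads text cannot tell whether that footer is visible.
stuffed = honest + " used cars" * 20

print(f"honest:  {keyword_score(honest, query):.2f}")
print(f"stuffed: {keyword_score(stuffed, query):.2f}")
```

The stuffed page scores several times higher without saying anything more useful to a visitor, which is exactly the weakness spammers exploited.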

This became such a widely abused tactic that most search engines stopped using the meta keywords field as a relevancy signal altogether. However, the same kind of deception continued elsewhere on site pages, and it wasn’t just small companies doing it: in 2006 none other than BMW was caught using keyword-stuffed doorway pages for ‘used cars’ and found itself on the wrong side of a Google penalty.

Off-page content factors

Keyword-matched search results weren’t a great user experience, and then along came Google with PageRank™, which used off-page backlinks as a ranking factor, in addition to page content, domain and URL signals. By analysing who linked to a site and classifying the web in terms of authority sites, it now became possible to recognise top sites in different subject areas across the internet. This is something Google still use and have developed, with Google Authorship looking to return more authoritative writers in searches for content in their fields of expertise. Combined with a larger crawl index and speedy presentation of results, Google quickly made other search engines (like AltaVista) irrelevant.
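The core idea behind PageRank, that a link passes on a share of the linking page’s own authority, can be sketched as a short power iteration. This is a simplified textbook version, assuming a damping factor of 0.85 (the value suggested in Brin and Page’s original paper); the `pagerank` function and the four-page link graph are hypothetical, and Google’s production ranking combines link analysis with many other signals.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             iterations: int = 50) -> dict[str, float]:
    """Simplified PageRank by power iteration.

    `links` maps each page to the pages it links to; every linked page
    must also appear as a key. A page with no outlinks spreads its rank
    evenly across all pages.
    """
    pages = list(links)
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}  # start with equal authority
    for _ in range(iterations):
        # Every page receives a small baseline share ("random jump").
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page, outlinks in links.items():
            if outlinks:
                # Each outlink passes on an equal slice of this page's rank.
                share = damping * rank[page] / len(outlinks)
                for target in outlinks:
                    new_rank[target] += share
            else:
                for target in pages:
                    new_rank[target] += damping * rank[page] / n
        rank = new_rank
    return rank


# Hypothetical mini-web: three pages link to "authority", nobody links to "orphan".
web = {
    "authority": ["blog", "shop"],
    "blog": ["authority"],
    "shop": ["authority"],
    "orphan": ["authority"],
}
ranks = pagerank(web)
for page, score in sorted(ranks.items(), key=lambda kv: -kv[1]):
    print(f"{page:10s} {score:.3f}")
```

The most-linked page ends up on top (roughly 0.48 here), while the page nobody links to keeps only the baseline share. It also hints at why manufactured links became so valuable: every extra inbound link feeds rank to its target.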

Again, this provided several opportunities for a ‘quick fix’. Links could be exchanged, traded, manufactured or bought, with their anchor text targeted at keyword phrases. Much like athletes using illegal steroids, smaller sites could quickly outstrip the competition and leap to the top of the SERPs, overtaking much larger companies with better on-page content.

Unsurprisingly, this started making a mockery of search results in the same way keyword stuffing had done previously (Eric Schmidt once referred to search results as a ‘cesspool’). Although Google continually released major feature and quality-control updates, it was still possible to exploit loopholes through linking, that is, until the famous Panda and Penguin updates arrived.

Google fights back

Progressively, over months and numerous iterations, these updates identified and re-ranked sites according to good or bad signals from content and backlinks, allowing Google’s spam team to concentrate on the tell-tale signs of link networks and other forms of mass-scale manipulation. It is easy to argue that, despite the occasional glitch in certain sectors and niches (sometimes addressed specifically by Google, as with the ‘Payday Loan’ update), the quality of search results improved. A search for a pharmaceutical, for example, will now generally return a site with qualified medical advice on its usage, effects and structure rather than affiliates trying to sell it.

Are quick SEO ‘tricks’ still possible? Of course, but remember: if a site suddenly pops to the top of search results, don’t be surprised if Google engineers take a close look at why and how it got there (as may everyone else, via the ‘report’ function). If it’s because the site has worked very hard, built a loyal following and does something people find useful or enjoyable when they find it in search, then great! If, however, something else is involved, this new visibility is unlikely to last long.

Where are we now?

Previous SEO techniques focused on the search engine:

Technically: what pages need to be crawled?

Semantically: what’s the important content on this page?

Socially: who likes and links to this page?

You will notice that one very important factor tends to be left out of this equation: the customer.

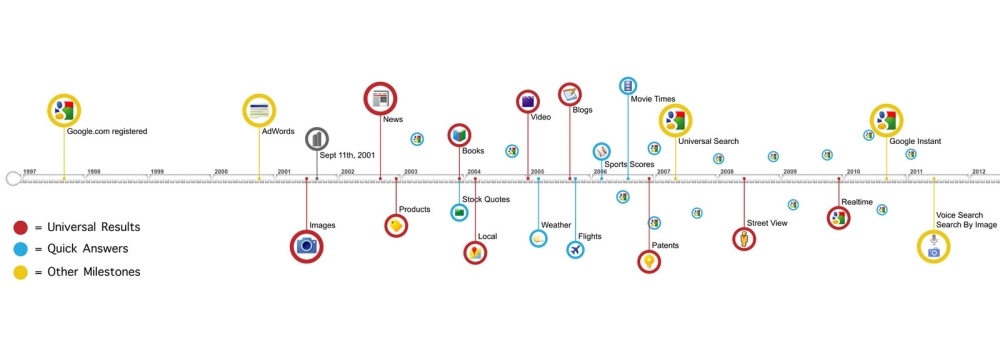

Although all of the above will remain part of search marketing, it’s how sites handle the user experience that will determine whether they cross the finish line first or stagger in behind the front-runners. Consider the timeline of major Google developments up to 2011 published on the official Google blog.

What’s immediately obvious is the number of different ways Google has of capturing and presenting user and behavioural data: what you read, where you travel, where you live and which team you follow. What lies beyond the timeline’s telling 2011 end point of ‘voice search’ is how all this data will be tied together and presented to people searching online. Sites stuck at the marketing level of keyword matches and ‘we don’t do social’ aren’t going to appear in this new search world. Why should they? The customer market is a vibrant, varied and constantly changing place. It no longer needs to pick from the top 10 sites suggested by search engine results; it can decide, based on its informational needs, which sites provide the most useful and interesting content... and customers will share that content with others when they find it, too.

If all this sounds daunting, it doesn’t have to be. You can, as Dr David Sewell points out in his presentation on Google as Predator, simply pay Google to be included in these marketplaces (and realistically that is the only way to be visible in some of them). Or you can evolve, stop thinking so much about tricking a search algorithm, and discover ways to find and delight your customers.

For example, who would be impressed by news that you had launched a new bus stop advert? Pepsi Max bet that people would be, if the advert was awesome enough.

At Fresh Egg, we take the time to combine the ‘traditional’ digital marketing checklist with a detailed discovery process about a client’s customers: where and how they search, and what kind of content they look for and engage with when on a website. Our case studies show what difference our clients think this approach makes for their business. In addition to detailed technical audits of a website and its performance, we look at the opportunities in wider digital markets and recommend the site content and campaigns needed to cater for those audiences. Once that content is live on a website, its performance and user interaction can be monitored and tested through ongoing conversion rate optimisation, to ensure both businesses and customers are getting what they need.

So the focus of SEO has changed. Although it’s still marketing, the customer is now firmly at its centre (where they should always have been), and these days SEO may not even involve a website, concentrating instead on inbound marketing across other popular customer channels. When it is done correctly, however, the results can be just as spectacular.