Get results you can trust

Our in-house strategists, analysts and A/B test developers will validate your set-up at every stage, so your data is clean and reliable.

Test new ideas before you build them with specialist A/B test developers and implementation support. Our experimentation teams build rock-solid tests for results you can trust.

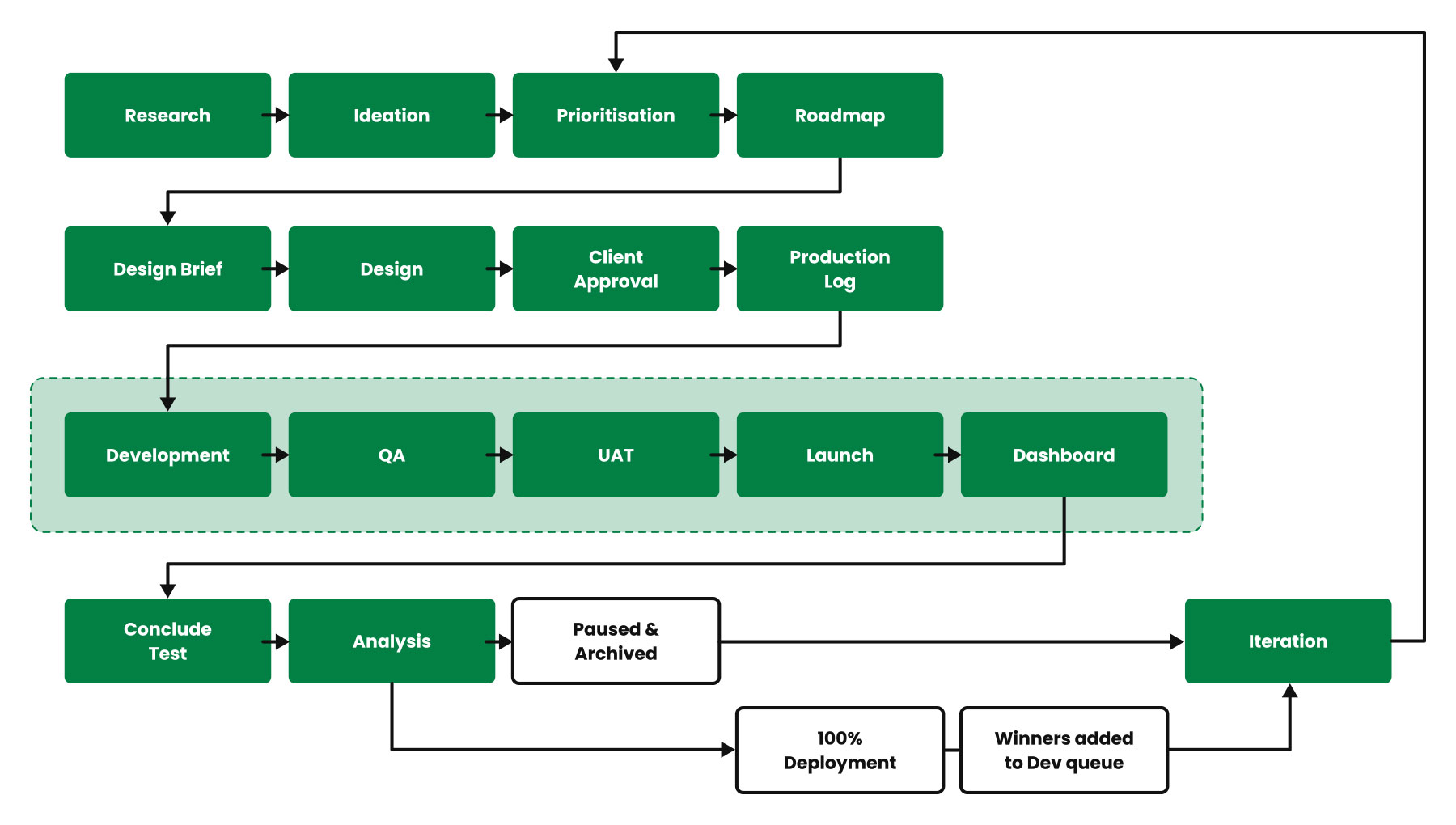

Struggling to build reliable A/B tests? We’ve optimised hundreds of digital experiences over the past decade, establishing solid formulas and watertight workflows. That means we can produce reliable tests in record time, increasing your ROI from experimentation.

Implementing A/B tests faster gives you more opportunities to discover extra revenue. That means you can transform your ROI from conversion rate optimisation (CRO).

By demonstrating the power of evidence-led thinking, our A/B testing team serve as a “centre of excellence” for your organisation.

Giving your teams access to high-velocity testing is the best way to demonstrate the power of experimentation and evidence-led thinking. Our A/B testing team can provide a resource for designers, product teams, advertising strategists, and any other stakeholder with a measurable KPI.

Building a client-side or server-side test requires specialist development skills. Coding the experience correctly helps to prevent flicker and reduces the risk of unexpected bugs. Our development team have decades of test-building experience, so you get flawless test variants and data you can trust.

"I have never felt more confident in a site testing strategy. I have presented these results to the board and 70 branch managers, and they have been met with applause (literally!)."

Anna Macleod, Marketing Director at KFH

Our step-by-step development process is clearly documented in playbooks and checklists that help you keep track of our progress. Giving you oversight of our work means we can adjust to your requirements and find more efficient ways of working.

"Fresh Egg quickly achieved impressive results, allowing us to further invest in their CRO services and increasing the pace of testing and UX improvements on our website."

Sarah Leach, Head of Marketing and eCommerce at Marshalls

To run a high-velocity testing programme you need to make a lot of decisions about technical solutions and strategic priorities. You may also need training on specific aspects of A/B testing, such as how to apply statistical methods effectively. Our award-winning CRO strategists can provide strategic support on every aspect of your testing programme.

"Our clients often have fantastic ideas for optimising their website, but don't have the time or the know-how to test them. We can turn a small list of big ideas into a promising roadmap, then implement tests in a way that maximises the ROI from the winning solutions."

Seb Larsson, Senior CRO Strategist at Fresh Egg

Our A/B testing team can give you the power of experimentation, turning your ideas into proven opportunities. Tell us about your project and we will set up a strategy call with a member of our CRO team.

Let's have a chat about it! Call us on 01903 337 580