How to Deal With Bot Traffic in Your Google Analytics

By Julian Erbsloeh | 13 May 2015

Update - 13/05/2015

Since this post was first published, referral spam has become a more serious issue, affecting most of the websites Fresh Egg audits and works with. New spam referrers are appearing almost daily.

At the time of writing the original post (23 February 2015), the number of known spam referrers was so large that they had to be split across two filters. Today, five filters are needed to exclude all known spam referrers, and the number is still growing. Pressure on Google to do something about this is mounting but, at this stage, there has been no official response.

The closest thing we have found to a tool that automates setting up referral spam exclusion filters is this one from Simo Ahava. However, we have not been able to test it successfully because of the large number of accounts we manage.

The best way to tackle referral spam is to set up (manually or using the above tool) a series of exclusion filters. Details of how to do this can be found below. There’s also a link to the resource we use to stay informed of all the new spam referrers. As well as these exclusion filters, you should also ensure you have a hostname filter set up that includes only valid hostnames.

Since I originally wrote this post, I have also come across a new kind of GA spam: event spam.

Do not be tempted to visit the website advertised in this fake event, as it may plant malicious software on your computer.


If you have a robust hostname filter in place, you won’t have this issue. If you see the above event (or a similar one that you clearly haven’t set up), setting up a hostname include filter should resolve the issue.

It’s worth noting that hostname filters can be destructive, and accidentally excluded data cannot be recovered. So, if you’re unsure about how to set up a hostname filter, do get in touch.
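For readers who want to see the logic, here is a minimal Python sketch of what a hostname include filter does. The hostnames `example.com` and `www.example.com` are hypothetical placeholders for your own domains. We assume the filter pattern is evaluated as a regular expression against each hit’s hostname. Any hit whose hostname does not match is dropped, which is why fake events and ghost referrals that never touched your site disappear.

```python
import re

# Hypothetical pattern listing the only hostnames that legitimately serve your tracking code.
VALID_HOSTNAME_PATTERN = re.compile(r"^(www\.)?example\.com$")


def keep_hit(hostname: str) -> bool:
    """Return True if an include filter on hostname would keep this hit."""
    return VALID_HOSTNAME_PATTERN.search(hostname) is not None


# Hypothetical hostnames as they might appear in the hostname report.
hits = ["www.example.com", "example.com", "(not set)", "spam-site.xyz", "example.com.evil.net"]
for h in hits:
    print(h, "kept" if keep_hit(h) else "excluded")
```

Anchoring the pattern with `^` and `$` is a deliberate choice. Without the anchors, a spoofed hostname such as `example.com.evil.net` would also match. The flip side is that any legitimate hostname you leave out of the pattern will be excluded too, and that data cannot be recovered.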

------------------------------------------------------------------------------------------------------------------------------------------------------------

Non-human traffic from robots and spiders to your website is not new, but so-called ‘bot traffic’ can be a nuisance in many ways. This post will show you how to identify bot traffic in your Google Analytics (GA) account and how you can deal with it.

What is bot traffic and where does it come from?

We all know that search engines use bots to crawl and index the web so they can return relevant search results to users. The bots used by all the major search engines do not show up in GA, and we don’t want to block them from crawling our websites.

But the number of bots that execute JavaScript, and therefore trigger the GA tracking code, is steadily increasing. Bots now frequently view more than one page on your website, and some even convert on your goals. As bots become smarter, we too need to become smarter to ensure this traffic does not cloud our judgement when we make important business decisions based on the data in our analytics platform.

Competitors and aggregator sites

Bots are also used to search websites for information such as prices, news, betting odds and other content. This means that certain sectors are more prone to bot traffic than others. Fresh Egg has recorded higher-than-average bot traffic in the travel, gambling, recruitment, retail and financial services sectors. This is most likely due to bots from competitor sites scraping information, or to market aggregators that scrape websites to compare the prices of certain products across a wide range of providers.

Site monitoring services

Site monitoring services, such as Site Confidence, which track your website’s uptime and performance, also use bots to ping the site regularly, measuring response codes and response times. These bots can also end up in your GA data and can have a dramatic impact on it.

Spam referral

Referral spam is another form of bot traffic that has increased lately. Traffic from spam referrers such as Semalt hides in your referral traffic and skews your performance data just like any other bot traffic.

Malicious bot traffic

And then there is malicious bot traffic, which isn’t really traffic at all. It consists of automatically generated hits sent straight to your GA account, without the bot ever visiting your site, in order to mess with your data.

Why is bot traffic a problem?

Traffic from bots is generally low-quality traffic that will skew your aggregated data.

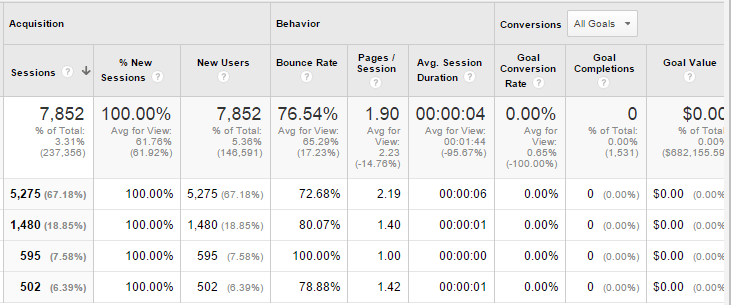

For example, bot traffic will generally consist of 100% new sessions, view one to two pages on average and have a very low average session duration. What’s more, bot traffic will rarely convert on any of your goals.

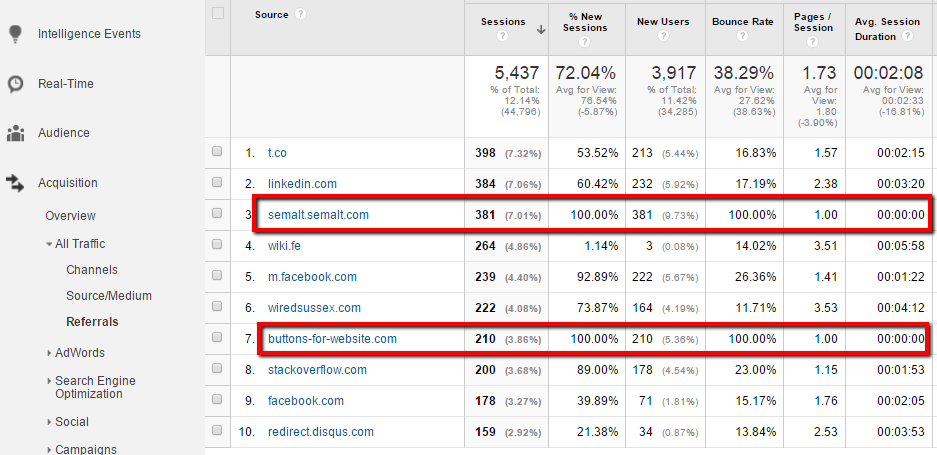

All four traffic sources in the above screen shot are potential bots, but note how they differ on ‘bounce rate’, ‘pages/session’ and ‘average session duration’.

In some cases, bot traffic can also show a bounce rate of 100% because the bot visits only a single page. This is often the case with site monitoring services.

As a result, bot traffic not only inflates your session count, it also dilutes some of your key performance metrics, such as engagement and your conversion rate. In summary, if you have an issue with bot traffic, you cannot trust your data.
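A quick worked example shows how bot traffic dilutes the conversion rate. The figures below are purely hypothetical. There are 10,000 human sessions producing 200 conversions, plus 2,500 bot sessions that never convert.

```python
def conversion_rate(conversions: int, sessions: int) -> float:
    """Conversion rate as a percentage of sessions."""
    return 100.0 * conversions / sessions


# Hypothetical figures: human sessions and the conversions they produce.
human_sessions = 10_000
conversions = 200
# Hypothetical bot sessions, which add to the session count but never convert.
bot_sessions = 2_500

print(f"Human-only rate: {conversion_rate(conversions, human_sessions):.2f}%")
print(f"Reported rate:   {conversion_rate(conversions, human_sessions + bot_sessions):.2f}%")
```

The reported rate falls from 2.00% to 1.60%, a relative drop of a fifth. Nothing about your real users’ behaviour has changed.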

Bot traffic also adds to the load on your server, so it may affect real users’ experience by increasing page load times.

Top tip: Check your data regularly for potential bot traffic and put a process in place to deal with it. Bot traffic renders your data inaccurate and may lead to poor decision making.

How to identify bot traffic in your GA

The first place we usually check for unwanted traffic during a GA audit is the hostname report. We have recently dedicated a whole post to setting up a hostname filter to exclude traffic from unwanted sources.

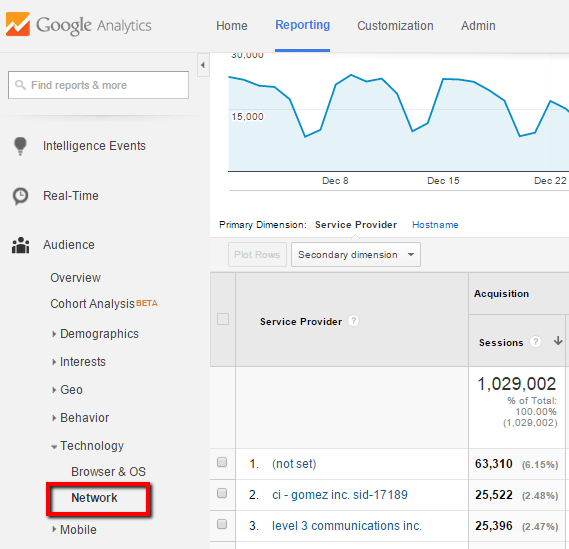

Next, we look at the service provider report which can be found under Audience > Technology > Network:

Now, what are we looking for in this report? Most bots have one thing in common: their sessions are 100% new, because GA cannot store a cookie on them to recognise them when they next hit the site. So, service providers with a very high percentage of new sessions and low engagement metrics, such as ‘pages/session’ and ‘average session duration’, are suspicious.

To add further evidence to the case, you should check conversion rates – bot traffic rarely converts, especially if the goal requires data entry such as form completion or a purchase.
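The checks described above can be expressed as a simple rule. This Python sketch is an illustration, not a GA feature. The field names mirror the columns of the service provider report. The thresholds are assumptions you would tune to your own site: 95% new sessions, two pages per session, ten seconds average duration and zero conversions.

```python
from dataclasses import dataclass


@dataclass
class ProviderRow:
    name: str
    sessions: int
    pct_new_sessions: float      # e.g. 99.5 means 99.5% new sessions
    pages_per_session: float
    avg_session_duration: float  # seconds
    conversions: int


def looks_like_bot(row: ProviderRow,
                   min_pct_new: float = 95.0,
                   max_pages: float = 2.0,
                   max_duration: float = 10.0) -> bool:
    """Flag rows matching the typical bot footprint (thresholds are assumptions)."""
    return (row.pct_new_sessions >= min_pct_new
            and row.pages_per_session <= max_pages
            and row.avg_session_duration <= max_duration
            and row.conversions == 0)


# Hypothetical rows from a service provider report export.
rows = [
    ProviderRow("hypothetical hosting ltd", 1200, 100.0, 1.9, 2.0, 0),
    ProviderRow("hypothetical broadband plc", 5400, 48.0, 4.2, 185.0, 61),
]
suspects = [r.name for r in rows if looks_like_bot(r)]
print(suspects)  # ['hypothetical hosting ltd']
```

Like the visual method, this rule only catches the most obvious bots. A provider that mixes real users with bots will slip through.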

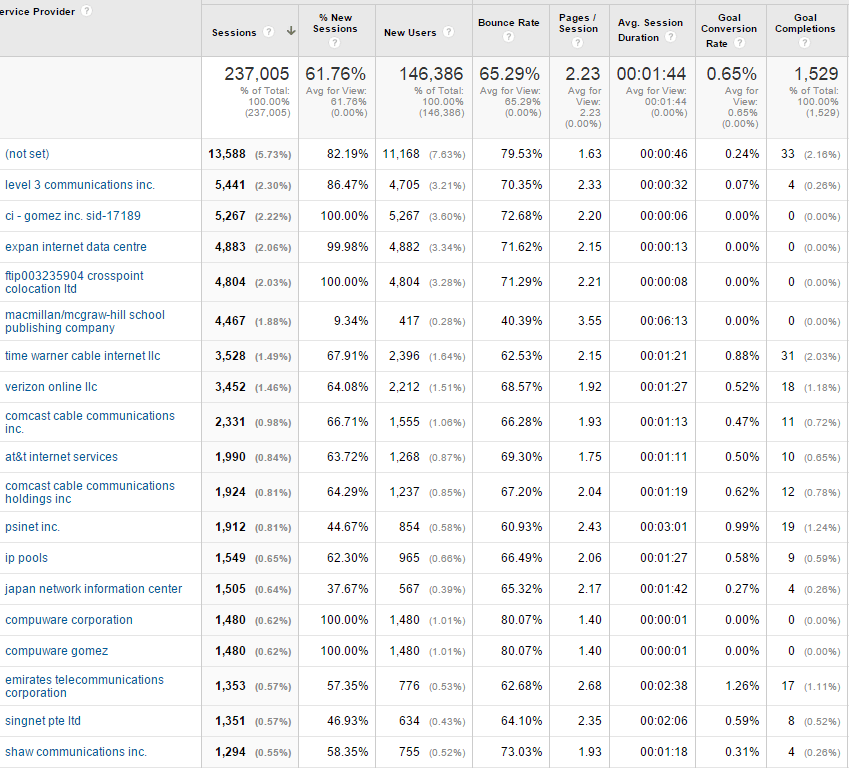

Let’s see if you can spot the potential bots in this next screen shot:

The above table contains a number of potential bots which stand out with a very high percentage of new sessions and very low average session duration. Can you spot them? Try to find at least five. The answer can be found at the bottom of this blog post.

This visual method is, of course, very crude and will only spot the most obvious bots. However, it allows you to quickly see whether you have a serious issue, which will help you decide what to do next.

Do not be surprised to find apparent bot traffic from service providers like Amazon Inc. and Google Inc. These are usually not bots sent by those companies. They are bots run by third parties on those companies’ cloud servers, often in an attempt to disguise their origin. Treat these with just as much suspicion as any other service provider sending low-quality traffic.

So, how are we going to deal with all this bot traffic?

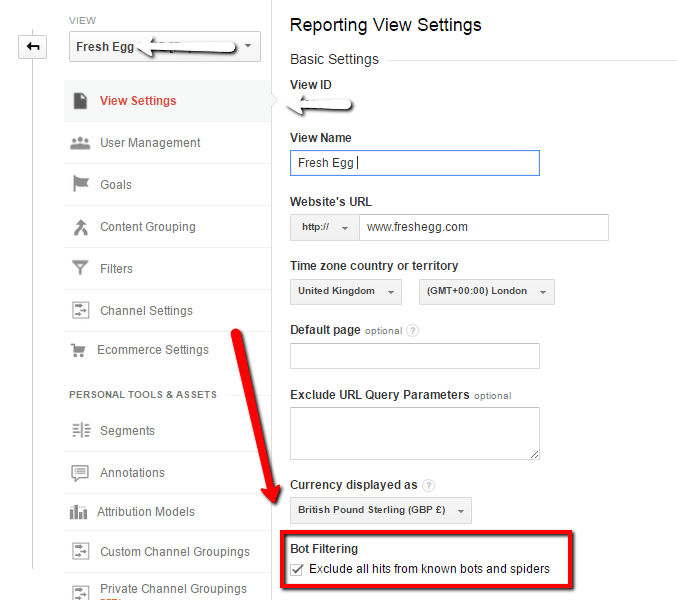

GA bot filter option

GA introduced a bot filtering option a while ago, which can be activated at view level in the view settings. It excludes all known bots and spiders listed by the Interactive Advertising Bureau (IAB). The problem is that access to the IAB list is very expensive, so it is not publicly available. By ticking this box in GA, we are therefore excluding a lot of traffic without any visibility of what we are actually excluding.

And we do want to know which bots are being excluded so that we can ask our developers to block them from accessing our site. Excluding bots from GA only hides the issue; it does not fix it.

Top tip: Before applying the native GA bot filter to your reporting view we would always suggest you apply it to a test view first. Ideally, the test view is identical to your raw backup view so that you get a clear picture of the filter’s impact.

Once applied to the test view, you should collect a reasonable amount of data – no less than a week. And then, by comparing the data in your test view with your backup view, you will immediately see the impact of the filter.

The levels of bot traffic we have seen range from below 5% to as much as 40% in some industries. If the impact is below 5%, we apply the bot filter to the reporting view without much further investigation. However, if the impact is significant, we want to know which bots are sending all those hits so we can block them from accessing the website via the .htaccess file.
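The 5% rule of thumb comes down to simple arithmetic on the two views. The sketch below assumes you have read total sessions for the same date range from your backup view and your test view. The session counts are hypothetical.

```python
def filter_impact(backup_sessions: int, test_sessions: int) -> float:
    """Share of sessions (in %) removed by the filter in the test view."""
    if backup_sessions == 0:
        raise ValueError("backup view has no sessions")
    return 100.0 * (backup_sessions - test_sessions) / backup_sessions


def next_step(impact_pct: float, threshold_pct: float = 5.0) -> str:
    """Apply the rule of thumb: small impact -> apply, significant impact -> investigate."""
    if impact_pct < threshold_pct:
        return "apply bot filter to reporting view"
    return "investigate which bots are responsible before applying"


# Hypothetical totals for the same week in both views.
impact = filter_impact(backup_sessions=20_000, test_sessions=19_400)
print(f"{impact:.1f}% excluded -> {next_step(impact)}")
```

With these figures the filter removes 3.0% of sessions, so the sketch suggests applying it straight away.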

To dig a little deeper into the nature of your bot traffic, download the service provider reports for the test view and your backup view as CSV files. Paste them both into the same Excel workbook and use the VLOOKUP function to identify the service providers that show the greatest discrepancy in sessions between the two data sets. Pass the list of top offenders on to your developers and ask them to identify and block those bots from accessing the site.
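If you prefer a script to VLOOKUP, the same comparison can be sketched in Python with the standard `csv` module. We assume each export has a header row with `Service Provider` and `Sessions` columns. It may also contain `#` comment lines and thousands separators. The exact file layout is an assumption here, so check your own files. The provider names and numbers are hypothetical.

```python
import csv


def load_sessions(csv_text: str) -> dict[str, int]:
    """Map service provider -> sessions from a CSV export (column names assumed)."""
    # Skip blank lines and '#' comment lines that some exports include.
    lines = [ln for ln in csv_text.splitlines() if ln.strip() and not ln.startswith("#")]
    reader = csv.DictReader(lines)
    return {row["Service Provider"]: int(row["Sessions"].replace(",", ""))
            for row in reader}


def top_discrepancies(backup: dict[str, int], test: dict[str, int], n: int = 10):
    """Rank providers by sessions present in the backup view but missing from the test view."""
    diffs = {name: sessions - test.get(name, 0) for name, sessions in backup.items()}
    return sorted(diffs.items(), key=lambda item: item[1], reverse=True)[:n]


# Hypothetical exports for the same date range.
backup_csv = """Service Provider,Sessions
hypothetical hosting ltd,"1,200"
hypothetical broadband plc,"5,400"
hypothetical cloud inc.,800
"""
test_csv = """Service Provider,Sessions
hypothetical hosting ltd,150
hypothetical broadband plc,"5,390"
"""

ranking = top_discrepancies(load_sessions(backup_csv), load_sessions(test_csv))
for name, missing in ranking:
    print(f"{name}: {missing} sessions excluded")
```

Providers missing entirely from the test view, such as `hypothetical cloud inc.` here, are treated as fully excluded. Those are usually the first candidates to pass on to your developers.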

What if you have more bots?

The native bot filter may not catch all the bots that are pestering you, so you may need to become a little more creative.

If you still find suspicious traffic in your reports after you have successfully applied the bot filter, you can create a manual filter. Some analytics platforms, such as Omniture, capture a visitor’s IP address, which makes it much easier to identify the source of the fake traffic and to exclude it accurately. In GA, the most useful dimension for this is the service provider.

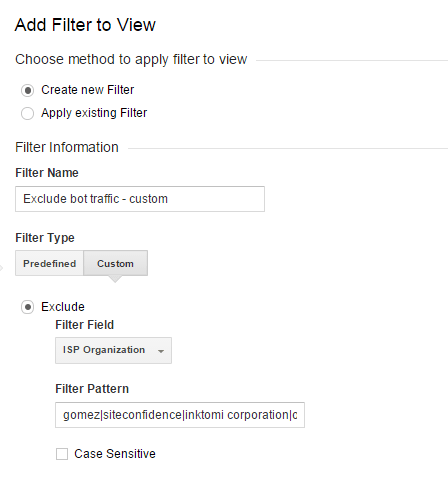

Creating a custom bot filter

- Follow the above steps to identify the service providers that are responsible for sending low quality traffic

- Build a regular expression that captures all of them (test it thoroughly using a service like regexpal)

- Set up an exclude filter on the ‘ISP Organisation’ field (the service provider) in your test view

- Collect some data, compare it with the backup view and then apply the filter to the main reporting view if you are happy with the result
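The second step can be sketched in Python before you paste anything into GA. The offender names are hypothetical. The helper `escape_for_filter` is our own: it backslash-escapes regex metacharacters such as `.` and `(` so provider names are matched literally, while leaving spaces readable. We assume the GA filter is not set to case sensitive, hence `re.IGNORECASE`.

```python
import re


def escape_for_filter(name: str) -> str:
    """Backslash-escape regex metacharacters but leave spaces readable."""
    return re.sub(r"([.^$*+?()\[\]{}|\\])", r"\\\1", name)


# Hypothetical offenders identified from the service provider report.
offenders = ["hypothetical hosting ltd", "hypothetical cloud inc.", "example colo (uk)"]

# Join the escaped names into one alternation anchored to the whole field value.
pattern = "^(" + "|".join(escape_for_filter(name) for name in offenders) + ")$"
regex = re.compile(pattern, re.IGNORECASE)

# Test the pattern against names that should and should not match.
should_match = ["hypothetical hosting ltd", "Hypothetical Cloud Inc.", "example colo (uk)"]
should_not_match = ["hypothetical broadband plc", "hypothetical cloud incorporated"]

assert all(regex.search(s) for s in should_match)
assert not any(regex.search(s) for s in should_not_match)
print(pattern)
```

The assertions play the same role as checking matches by hand in regexpal. They confirm that a similar but innocent provider name is not caught by accident.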

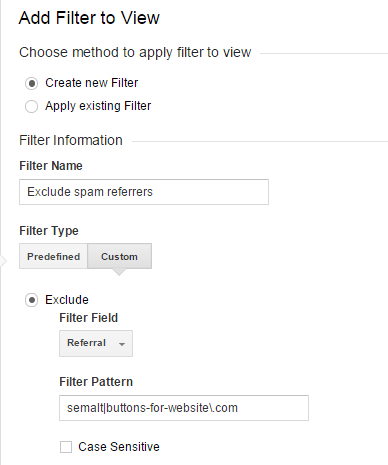

How to deal with spam referrers

Identifying spam referrers in your data and excluding them follows a very similar pattern to the one explained above.

Visit the Acquisition > All Traffic > Referrals report and look out for sources sending very poor quality traffic. The two highlighted in the screen shot below are serial offenders which we find in almost every GA account we audit:

If you are running the latest version of the GA tracking code, Universal Analytics, you can exclude unwanted referrers via the referral exclusion list. However, the documentation on how the referral exclusion list treats sessions is rather unclear. The industry consensus seems to be that a referrer with no previous campaign associated with it will start to show up as 'direct' traffic once added to that list. In other words, the spam sessions are not removed, just relabelled.

The best solution to exclude the traffic of these spam referrers is to set up a simple exclusion filter:

However, this can turn into quite an admin task as new spam referrers appear over time. Before you rack your brains about how to stay on top of them all, quantify the problem so you can decide whether it is worth the effort. In many cases, spam referral traffic makes up only a very small proportion of overall traffic. And, as always, it is worth reiterating how important it is to test thoroughly any filters you add to your data. That applies to include filters, exclude filters and native filters applied with a tick box. Remember: data excluded by a filter cannot be recovered.
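As the update at the top of this post notes, the list of known spam referrers has grown to the point where it has to be split across several filters. This Python sketch packs a list of referrer domains into as few `|`-separated patterns as possible. We assume a limit of 255 characters per filter pattern, which is our understanding of GA’s limit at the time of writing; please verify it before relying on it. The domains other than `semalt.com` are hypothetical placeholders.

```python
import re


def build_filter_patterns(domains: list[str], max_len: int = 255) -> list[str]:
    """Greedily pack escaped domains into '|'-separated patterns of at most max_len chars."""
    patterns: list[str] = []
    current = ""
    for domain in domains:
        part = domain.replace(".", r"\.")  # match dots literally
        if len(part) > max_len:
            raise ValueError(f"{domain} alone exceeds {max_len} characters")
        candidate = part if not current else current + "|" + part
        if len(candidate) <= max_len:
            current = candidate
        else:
            patterns.append(current)  # this filter is full, start the next one
            current = part
    if current:
        patterns.append(current)
    return patterns


domains = ["semalt.com", "hypothetical-spam-one.xyz", "hypothetical-spam-two.xyz"]
patterns = build_filter_patterns(domains, max_len=40)
for i, p in enumerate(patterns, start=1):
    print(f"Filter {i} ({len(p)} chars): {p}")

# Each domain should be caught by exactly one of the patterns.
assert all(sum(bool(re.search(p, d)) for p in patterns) == 1 for d in domains)
```

`max_len=40` is used only to force a split in this small example; in practice you would keep the default. The patterns are deliberately unanchored, so subdomains such as `www.semalt.com` are caught as well.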

A maintained list of spam bot referrals can be found here.

Top tip: Always annotate all changes affecting the data in GA so that all users of the data are aware of them. This is especially important when comparing against data from before the change was implemented.

Tackling bot traffic in your historic data

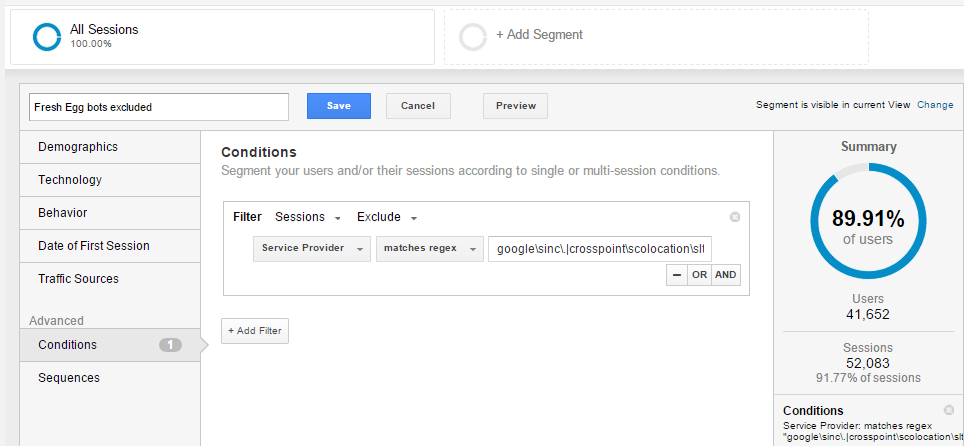

The limitation of the aforementioned filters is that they do not work ‘backwards’ – meaning they will not exclude bot traffic that has already been collected in your data. If you want to analyse historic data but you know it’s riddled with bot traffic and cannot be trusted, you can create a custom segment to exclude bot traffic.

This method will only be as effective as the regular expression you can create to capture rogue service providers, but it’s a good start.

Again, you will first need to create a regular expression to capture all the main offenders. Then, create a new segment as follows:

With the neat new ‘segment summary’ feature in GA you can immediately see how much traffic will be excluded by applying your bot filter.

Save it and use it for all your historic reporting and data crunching.
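To get a feel for what the segment summary is showing, the sketch below does the same calculation on exported historic data. It sums the sessions from providers matched by your offender regex and expresses them as a share of all sessions. The pattern and rows are hypothetical.

```python
import re

# Hypothetical pattern capturing the main offending service providers.
BOT_PROVIDERS = re.compile(r"^(hypothetical hosting ltd|hypothetical cloud inc\.)$", re.IGNORECASE)

# Hypothetical historic rows: (service provider, sessions).
historic = [
    ("hypothetical hosting ltd", 1_200),
    ("hypothetical cloud inc.", 800),
    ("hypothetical broadband plc", 8_000),
]


def excluded_share(rows, pattern) -> float:
    """Percentage of sessions that a segment excluding `pattern` would remove."""
    total = sum(sessions for _, sessions in rows)
    excluded = sum(sessions for name, sessions in rows if pattern.search(name))
    return 100.0 * excluded / total if total else 0.0


print(f"{excluded_share(historic, BOT_PROVIDERS):.1f}% of sessions excluded")
```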

How to set up an alert to inform you of new bots hitting your site

You can use GA Intelligence Events (custom alerts) to alert you to new traffic with the typical footprint of bot traffic.

Web analytics expert Dan Barker has written about creating automatic bot alert emails.

However, it is worth remembering that not all bots have a 100% bounce rate. Most of the bots we used as examples in this post viewed around two pages, which means the advanced segment logic Barker describes would need some adjustment.
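The adjustment Barker’s logic needs can be illustrated with a small offline check. This is not how GA Intelligence Events work internally. It is a Python sketch, under our own assumptions, that you might run on a daily export of the service provider report. It flags providers you have not seen before whose traffic is almost entirely new and shallow, without requiring a 100% bounce rate. Provider names and thresholds are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class DailyRow:
    provider: str
    sessions: int
    pct_new_sessions: float
    pages_per_session: float


def new_bot_suspects(today: list[DailyRow], known_providers: set[str],
                     min_sessions: int = 50, min_pct_new: float = 95.0,
                     max_pages: float = 2.5) -> list[str]:
    """Return unseen providers whose traffic fits a bot-like footprint.

    Bounce rate is deliberately not checked, so two-page bots are still caught.
    """
    return [r.provider for r in today
            if r.provider not in known_providers
            and r.sessions >= min_sessions
            and r.pct_new_sessions >= min_pct_new
            and r.pages_per_session <= max_pages]


known = {"hypothetical broadband plc"}
today = [
    DailyRow("hypothetical broadband plc", 900, 45.0, 4.1),
    DailyRow("hypothetical new datacentre ltd", 320, 100.0, 2.0),
    DailyRow("hypothetical small isp", 12, 100.0, 1.0),
]
print(new_bot_suspects(today, known))  # ['hypothetical new datacentre ltd']
```

The `min_sessions` threshold keeps tiny providers from triggering alerts every day. Lower it if your site has little traffic.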

Ultimately, bots and their behaviour differ from website to website and from one industry to another. The challenge is to identify the behaviour pattern, to isolate it and to then deal with it.

We would love to hear from you. What issues have you had with bot traffic in GA? Has the native bot filter option worked for you? If you feel that you simply cannot trust your data, get in touch with Fresh Egg to discuss your options and how we can help.

Answers to ‘spot the bot’: Ci – Gomez inc. sid-17189, expan internet data centre, crosspoint colocation ltd, Compuware corporation, Compuware gomez